Data has become the most valuable commodity to businesses in the 21st century. From start to finish, the success of every large corporation hinges on its ability to gather, process, manage, and utilize data.

To better utilize your data, read up on the most common data migration mistakes in our e-book:

Top Five Benefits of Adopting a Cloud Migration Proof Data Layer

Data pipelines have become pivotal to moving data from one place to another in a form that’s readily available for analysis. They eliminate most manual steps from the process, giving you faster, more reliable insights that bring you closer to your objectives.

Below, we’ll unpack what you need to know about data pipelines and how you can use data to gain a competitive edge.

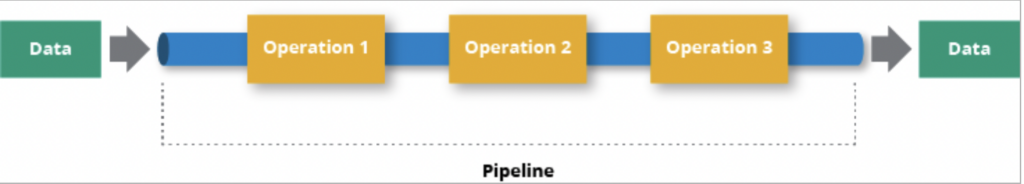

A data pipeline is a series of steps that moves raw data from different sources to a place where it can be stored and analyzed. Data gathered in its raw form may not be optimized for analysis, so a crucial part of a data pipeline’s job is to transform that raw data into a form that’s ready for reporting and for developing key business insights.

The data pipeline process can be broken down into three different stages.

The sources are essentially the locations from where data is gathered. These locations will include relational database management systems such as:

In many instances, data is ingested from disparate sources, then manipulated and translated based on the needs of the business.

The last stage involves transmitting the data to its intended storage location, which typically is a data lake or a data warehouse, for analysis.
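The three stages above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the two inline strings stand in for disparate sources, an in-memory SQLite database stands in for the data warehouse, and every name (`CSV_SOURCE`, `JSON_SOURCE`, the `orders` table) is hypothetical.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs standing in for two disparate sources.
CSV_SOURCE = "user_id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"user": 3, "total": "42.50"}]'


def extract():
    """Stage 1 (sources): read raw records from each source as-is."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Stage 2 (processing): map both layouts onto one (user_id, amount) shape."""
    unified = [(int(r["user_id"]), float(r["amount"])) for r in csv_rows]
    unified += [(int(r["user"]), float(r["total"])) for r in json_rows]
    return unified


def load(rows, conn):
    """Stage 3 (destination): write the unified rows to the analytics store."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (user_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    load(transform(*extract()), conn)


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
run_pipeline(conn)
```

Keeping each stage in its own function means a source or destination can be swapped without touching the other two.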

Batch-based data pipelines are one of the most common types, and they are run either manually or on a recurring schedule. As the name suggests, they process data in batches. Under this architecture, an application might generate a broad range of data points that need to be transmitted to a data warehouse, where they can be analyzed.

Batch-based data pipelines operate in cycles, where each cycle completes once all of the data has been processed. The time a run takes depends on the size of the data source being consumed, and can range from a couple of minutes to a couple of hours.

When active, batch-based data pipelines will place a higher workload on the data source. As a result, businesses tend to deploy them at times when user activity is low to avoid impeding other workloads.

They are typically used in situations where time sensitivity isn’t an issue, such as:

Below is an example of what a batch-based data pipeline would look like:
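In code, a batch cycle might look roughly like the sketch below. It assumes the pending records fit in a Python list and uses a plain list as a stand-in for the warehouse. The names `batches`, `run_batch_cycle`, and the record fields are illustrative, not taken from any particular tool.

```python
from itertools import islice


def batches(records, size):
    """Yield successive lists of at most `size` records."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def run_batch_cycle(source, warehouse, batch_size=3):
    """One cycle: finishes only after every pending record has been processed."""
    processed = 0
    for chunk in batches(source, batch_size):
        # Transform the whole chunk, then write it to the destination together.
        warehouse.extend(
            {"id": r["id"], "amount_cents": round(r["amount"] * 100)} for r in chunk
        )
        processed += len(chunk)
    return processed


# Hypothetical data accumulated by an application since the last run.
pending = [{"id": i, "amount": i * 1.5} for i in range(7)]
warehouse = []  # stand-in for a data warehouse table
count = run_batch_cycle(pending, warehouse)
```

In practice, a scheduler such as cron would typically call something like `run_batch_cycle` during off-peak hours, in line with the low-activity deployment described above.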

Because businesses now generate enormous amounts of data every day, companies have looked to streaming data pipelines as a better way of gathering, processing, and storing data. Streaming data pipelines operate continuously, and their architecture allows them to process millions of events at scale and in real time.

They’re used in scenarios where data freshness is mandatory and where organizations need to react instantly to market changes or user activity. For example, if our goal is to monitor consumer behavior on a website or an application, the data will come from thousands of users, each generating thousands of events. This can easily amount to millions of new records each hour.

Should it be mandatory for an organization to respond instantly to changes seen in real-time – downtime on an app or a website for example – using a streaming data pipeline would be the only viable option.

Typical use cases of streaming data pipelines include:

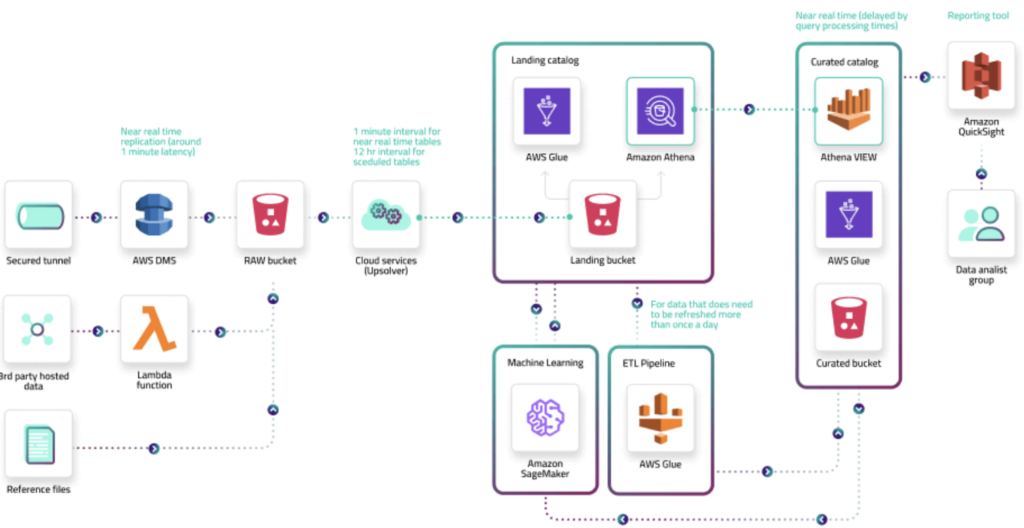

Below is an example of what a data streaming pipeline would look like:
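As a rough code-level counterpart, the sketch below handles events one at a time as they arrive, rather than waiting for a scheduled run. The generator `event_stream` is a hypothetical stand-in for a message queue or event log, and the event fields are made up for illustration.

```python
from collections import Counter


def event_stream():
    """Hypothetical source: in production this might be a message queue or log."""
    yield {"user": "a", "action": "click"}
    yield {"user": "b", "action": "view"}
    yield {"user": "a", "action": "click"}
    yield {"user": "c", "action": "error"}


def process(stream, alerts):
    """Handle each event as it arrives, keeping running per-action counts."""
    counts = Counter()
    for event in stream:
        counts[event["action"]] += 1
        if event["action"] == "error":
            # React immediately instead of waiting for a scheduled run.
            alerts.append(f"error seen for user {event['user']}")
        yield dict(counts)  # up-to-date snapshot after every event


alerts = []
snapshots = list(process(event_stream(), alerts))
```

Each snapshot reflects every event seen so far, which is what lets a streaming pipeline surface problems such as downtime the moment they occur.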

ETL stands for ‘extract, transform, load’ and is a data integration process that makes data consumable for businesses to leverage. An ETL pipeline will allow you to extract data from one or many sources, transform it, then push it into a database or a warehouse.

ETL pipelines are essentially made up of three interdependent data integration processes that together transfer data from one database to another. There are three fundamental differences between data pipelines and ETL pipelines.

The last stage of an ETL pipeline is loading the data into a database or a warehouse. A data pipeline is different: it doesn’t always end with the loading step. With data pipelines, the load can initiate new processes by triggering webhooks in other systems.
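To make the idea of a load that triggers further work concrete, here is a small sketch. A real webhook would usually be an HTTP request to another system. To keep the example self-contained, plain Python callables stand in for those calls, and the `Destination` class and hook names are hypothetical.

```python
from typing import Callable, Dict, List

Hook = Callable[[int], None]


class Destination:
    """In-memory stand-in for a warehouse that notifies listeners after a load."""

    def __init__(self) -> None:
        self.rows: List[Dict] = []
        self._hooks: List[Hook] = []

    def on_load(self, hook: Hook) -> None:
        """Register a callable to run after each load (a stand-in for a webhook)."""
        self._hooks.append(hook)

    def load(self, rows: List[Dict]) -> None:
        self.rows.extend(rows)
        # Loading is not the end of the line: each hook can start a new process.
        for hook in self._hooks:
            hook(len(rows))


triggered = []
dest = Destination()
dest.on_load(lambda n: triggered.append(f"refresh dashboard ({n} new rows)"))
dest.on_load(lambda n: triggered.append("retrain model"))
dest.load([{"id": 1}, {"id": 2}])
```

An ETL job in the strict sense would stop after `self.rows.extend(rows)`. The hook loop is the part that turns the load into the start of something else.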

Although data pipelines transfer data between different systems, they aren’t always involved in transforming it, unlike ETL pipelines.

ETL pipelines tend to be run in batches, where data is moved periodically in chunks. Conversely, data pipelines often operate as real-time processes that involve streaming computation.

It’s important to highlight that the data pipeline itself is a process for transferring data from the source to the target systems, whereas the data pipeline architecture is the broader system that extracts, regulates, and connects data to other components. This entire process typically comprises four steps:

Data pipelines can be broken down into repeatable steps that pave the way for automation. Automating each step minimizes the likelihood of human bottlenecks between stages, allowing you to process data at a greater speed.

Automated data pipelines can transfer and transform reams of data in a short space of time. What’s more, they can process many data streams in parallel. As part of the automation process, redundant data is removed to ensure that your apps and analytics run optimally.
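A minimal sketch of parallel stream processing with redundancy handling follows. It reads "redundant data" as duplicate records, which is an assumption. The stream names and contents are invented, and a thread pool is only one of several ways to run streams concurrently.

```python
from concurrent.futures import ThreadPoolExecutor


def clean(stream):
    """Drop duplicate records from one stream, keeping first occurrences in order."""
    seen = set()
    unique = []
    for record in stream:
        if record not in seen:
            seen.add(record)
            unique.append(record)
    return unique


# Hypothetical input streams; names and contents are illustrative only.
streams = {
    "web": ["u1", "u2", "u1", "u3"],
    "mobile": ["u4", "u4", "u5"],
    "api": ["u6"],
}

with ThreadPoolExecutor(max_workers=3) as pool:
    # map() preserves input order, so results line up with the stream names.
    results = dict(zip(streams, pool.map(clean, streams.values())))
```

Threads suit streams that spend most of their time waiting on I/O. For CPU-heavy transformations, a process pool would be the more usual choice.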

The data you gather will likely come from a range of different sources, each with its own features and formats. A data pipeline allows you to work with different forms of data irrespective of their unique characteristics.

Data pipelines aggregate and organize your data in a way that’s optimized for analytics, giving you fast access to reliable insights.

Data pipelines enable you to extract additional value from your data by utilizing other tools such as machine learning. By leveraging these tools, you’ll be able to carry out a deeper analysis of your insights that can reveal hidden opportunities, potential pitfalls, and ways you can enhance operational processes.

Easy to use and effective, Astera can extract data from any cloud or on-premises source. With this tool, data is cleaned, transformed, and sent to a destination system based on your business needs. What’s more, you can do all of this within a single platform.

Hevo Data is a no-code pipeline that allows you to load data from different sources to your data lake in real-time. The tool is time-efficient and is designed to make the tracking and analyzing of data across different platforms as easy as possible.

The tool also helps you automate your reporting process: you can connect more than 100 disparate data sources and examine their data in different formats.

Integrate.io transfers data from a source to a destination through the ETL process (extract, transform, load). This user-friendly tool links data sources and destinations through connectors that require minimal (or no) code, allowing you to move critical business information from different sources for analytics.

Data source connectivity

You’re able to draw data from over 100 different sources with pre-built connectors. This includes the integration of SaaS software, cloud storage, SDKs, and streaming services.

Deploy seamlessly

It only takes a few minutes to set up a pipeline through the simple, interactive user interface. Deployment is easy, and you can analyze and optimize data integration calls without compromising data quality.

Scales as data grows

You’re able to scale horizontally as the volume and velocity of data increase. Hevo Data can take on millions of records per minute with low latency.

Redis is a real-time in-memory data platform that provides low-latency, high-volume, highly scalable, and cost-efficient data ingestion, data processing, and data and feature serving. The whole point of a highly performant data pipeline is to serve data as fast as possible to customer-facing services, or even directly to customers. Relational databases, and even some NoSQL databases, can’t provide the speed and performance of an in-memory solution like Redis, so including Redis in your pipelines is paramount for enabling real-time use cases.

In-memory speed

Redis Enterprise can process more than 200 million read/write operations per second, at sub-millisecond latency.

Cost-efficient storage

Redis on Flash, which extends DRAM with SSD, enables cost-efficient storage and allows ingestion of very large multi-terabyte datasets while keeping latency at sub-millisecond levels, even when ingesting more than 1M items/sec.

Data type flexibility

Redis is one of the most data pipeline-friendly databases out there because of all of the native data types like: